Parts of a neural network

As I just said, this section will explain the different parts of a neural network. A lot of different types of networks exist and they all work differently but most networks focus on the same four parts:

- Inputs

- Neuron

- Synapses

- Outputs

Inputs

The inputs, as the name kind of suggests, are the part of the network that takes in data. This part is vital because a neural network has to learn from something. You probably couldn't just fly a rocket to the moon right now, but after reading up on it and watching someone do it, you might at least have a better chance of not dying. In the same way, a neural network can't write cake recipes without reading through a couple hundred pre-existing cake recipes and learning from them what a cake actually is. So, the inputs give the network the data that it then learns from.

Neuron

The neuron is the main part of the network. This is where the calculations go down. This is the chonky fonky. The big boi. The godfather. El numero uno. Mister big. Anyways, the job of the neuron is to take in signals from the inputs and add them together. So, if you have 2 inputs in the network, and one says the weather yesterday was 30° and the other says the air humidity was around 10%, the neuron will add this data together. So really, all the neuron does is just add up everything it's receiving.
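To make that concrete, here is a tiny sketch in Python of a neuron that does nothing but add. The function name `neuron` and the variable names are made up for this example, and the numbers are just the 30° and 10% from the paragraph above with their units dropped.

```python
def neuron(signals):
    """Add up every incoming signal. That's the whole job."""
    return sum(signals)


temperature = 30  # yesterday's temperature, in degrees
humidity = 10     # air humidity, in percent

total = neuron([temperature, humidity])
print(total)  # 40
```

Adding degrees to percent looks weird, and it is. At this point the neuron treats every input as a plain number.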

Synapses

Okay I didn't tell you one thing. There's another important part that comes before the neuron. Bear with me 🐻. In between the inputs and the neuron come the synapses, which you can kind of see as cables or pipes that move information across a neural network. Now one handy thing about synapses (and this is the main thing that gives neural networks their ability to learn and adjust themselves) is that they can change how much data goes through them.

If you want to go back to the pipes comparison from a moment ago, you can see this "adjustability" as faucets on the pipes that control how much water flows through them. This is helpful because some values may not be super important for the network to make its prediction. For example, if we go back to the weather-predicting network, let's say we have 3 inputs: temperature, humidity, and estimated number of clouds in the sky. In this case, the number of clouds in the sky doesn't necessarily have a direct relation to the weather the next day, so the value of the synapse that connects this input to the neuron will be relatively small, meaning that the signals from that input will be made smaller and therefore not have a huge effect on the network's prediction. The next section will go over how a network automatically changes these values to be able to reach its most accurate prediction and therefore learn to accomplish tasks.
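Here is a rough sketch of the faucet idea in Python, assuming that "turning the faucet" means multiplying a signal by a number. The names `synapse` and `strengths`, the input readings, and the strength values are all invented for illustration. A real network would learn its own values.

```python
def synapse(signal, strength):
    """Scale a signal by the synapse's strength (the 'faucet' setting)."""
    return signal * strength


# Made-up readings for the three weather inputs
inputs = {"temperature": 30.0, "humidity": 10.0, "clouds": 8.0}

# Made-up strengths: the cloud synapse is small because clouds matter least here
strengths = {"temperature": 1.0, "humidity": 0.5, "clouds": 0.125}

scaled = {name: synapse(value, strengths[name]) for name, value in inputs.items()}
print(scaled)  # {'temperature': 30.0, 'humidity': 5.0, 'clouds': 1.0}
```

The cloud count goes in as 8 but reaches the neuron as 1, so it barely nudges the final sum.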

Outputs

As the name suggests, the outputs are the final part of a neural network, which presents the results of its calculations. Once the network has done all the other stuff (get inputs, make a prediction via the neuron(s), etc.), it outputs its calculation. If you look at the comic on the previous page, this is the panel on the bottom left.

Let's go over the parts and what they do in order one more time.

- Inputs: give the network data from which it will learn

- Synapses: multiply the signals coming from the inputs by some value to change the size of said signals, which changes how much these signals affect the network's prediction. Bigger values have a larger influence on the network's final prediction, while smaller ones have less of an influence. This will be explained in greater detail in the next section.

- Neurons: add up all incoming signals

- Outputs: output the prediction of the network (note: often there will be some synapses that go between the neuron and the output)

In groups, these parts are called layers: the input layer, the synapse layer, the neuron layer (or hidden layer), and the output layer. To make all this clearer, you can look at the following diagrams of some neural networks (the flow always goes from left to right, so from the inputs to the outputs, as explained before). Try to relate the above list of parts and their functions to the diagrams of the networks to figure out how they may work.

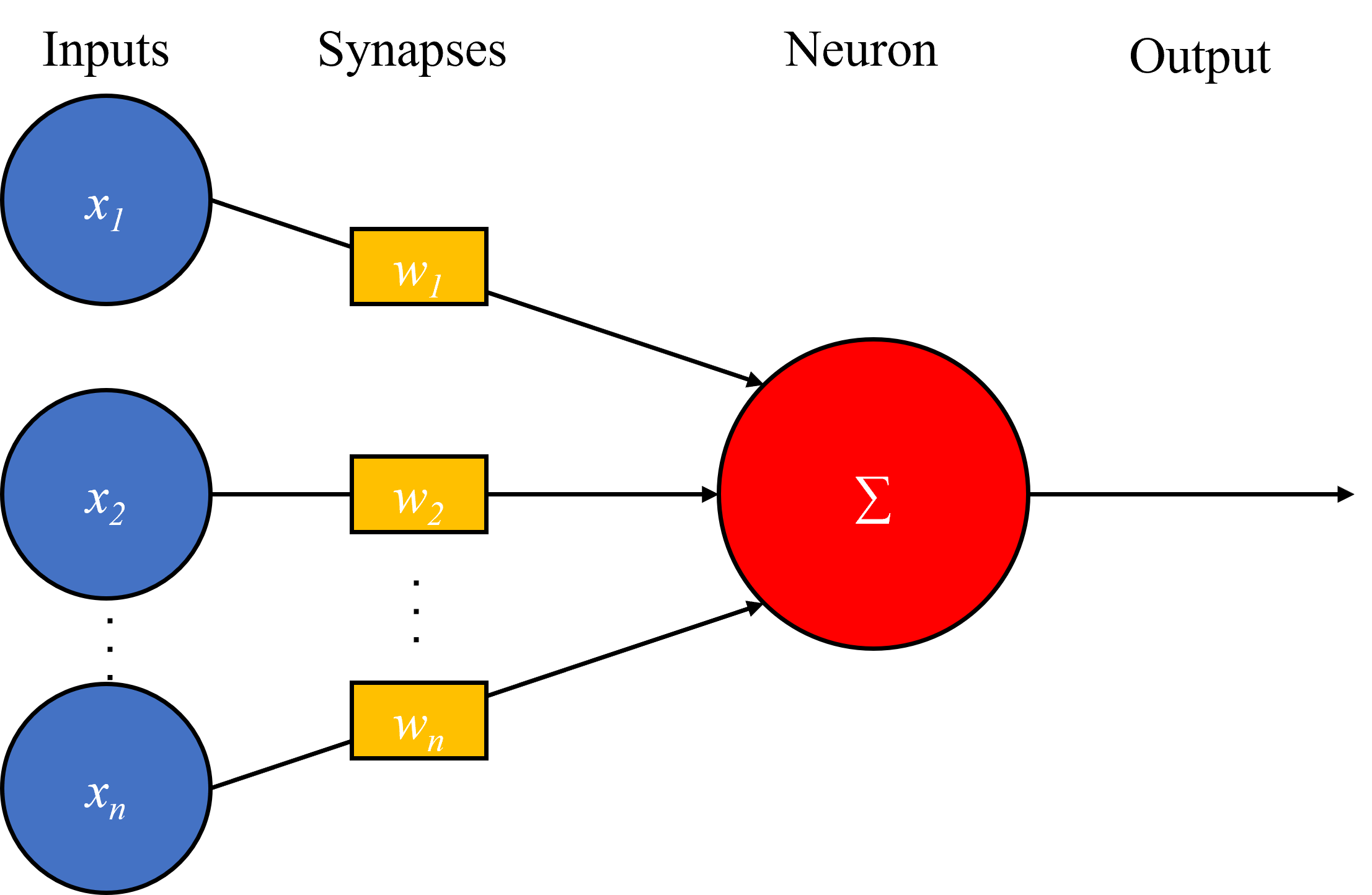

Diagram of a network with 3 inputs, 3 synapses (in the networks shown here, every neuron is connected to every input by a synapse), and one neuron, which is the output.

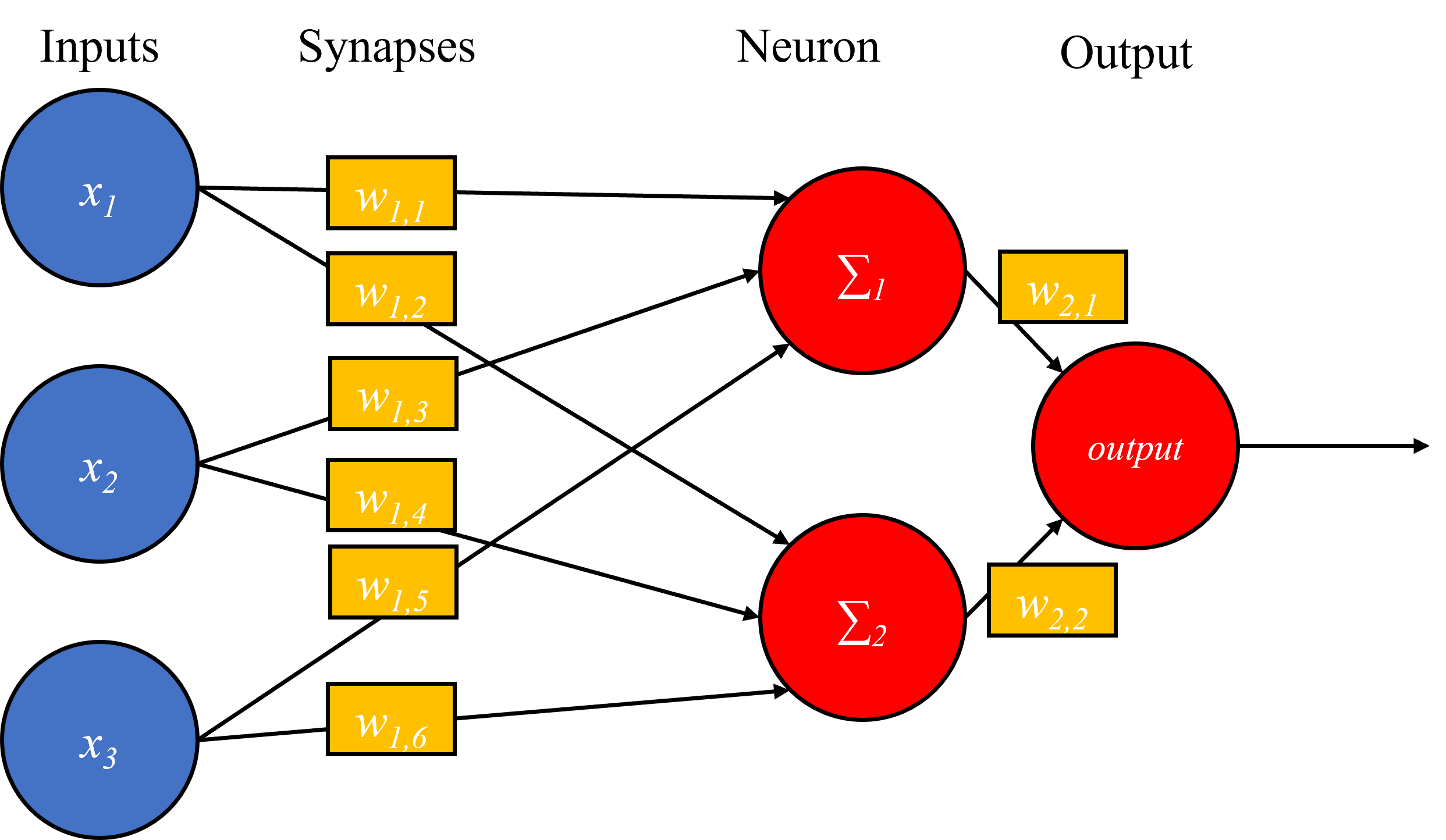

A larger network with 3 inputs, 8 synapses, 2 neurons, and one output (the final neuron acts as an output, summing up the incoming signals from the previous 2 neurons via 2 synapses).

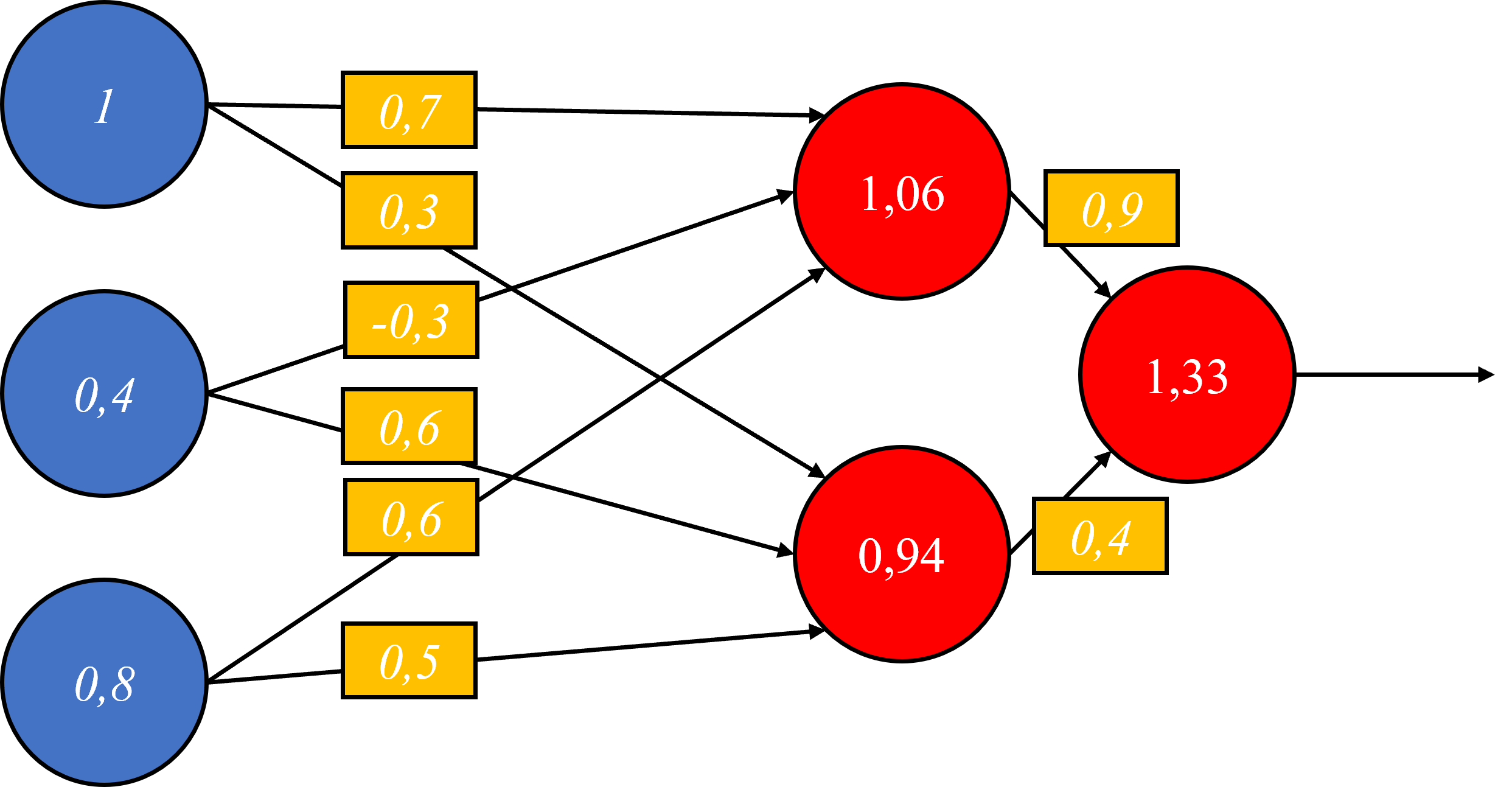

Diagram of the same network, now with mathematical values for each part of the network. Notice how the inputs are multiplied by the values of the synapses and then added up by the neurons.

As can be seen from the final diagram, all parts of a network can be (and, in programming, are) represented as variables which take on numerical values. The numerical values of synapses are known as (synaptic) weights.
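If you'd like to see that as actual variables, here is a minimal Python sketch of the larger network described above: 3 inputs, 2 neurons, and one output neuron, joined by 8 synapses. Every number here is made up for the example (they are not the values from the diagram), and the names `hidden_weights`, `output_weights`, and `neuron` are just labels I picked.

```python
def neuron(signals, weights):
    """Multiply each signal by its synaptic weight, then add everything up."""
    return sum(s * w for s, w in zip(signals, weights))


# Made-up input values
inputs = [30.0, 10.0, 8.0]

# 6 synapses: one row per neuron, one weight per input (made-up values)
hidden_weights = [
    [0.5, 1.0, 0.125],
    [0.25, 0.5, 0.0],
]

# 2 synapses from the two neurons to the output neuron (made-up values)
output_weights = [1.0, 0.5]

# Left to right: inputs -> synapses -> neurons -> synapses -> output
hidden = [neuron(inputs, weights) for weights in hidden_weights]
output = neuron(hidden, output_weights)

print(hidden)  # [26.0, 12.5]
print(output)  # 32.25
```

Counting the weights gives 3 × 2 + 2 = 8, which matches the 8 synapses in the diagram. Nothing in this sketch learns yet: the weights are fixed by hand.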

So, to summarize:

In a neural network, there are 4 main parts, which can each be represented by numbers: the inputs, the synapses, the neurons, and the outputs. Typically, the inputs connect to the neurons via synapses, and the neurons then lead either to other neurons via more synapses or to the output(s) (sometimes there are also synapses between the final layer of neurons and the outputs when fewer outputs are needed or when the values need to be compounded). The inputs relay information to the network, which is used to make a prediction. The synapses change the size of these input signals and then send them to the neurons, which sum up all the incoming signals. The outputs show the result of the network's prediction.